OutCast: Single Image Relighting with Cast Shadows

Eurographics 2022

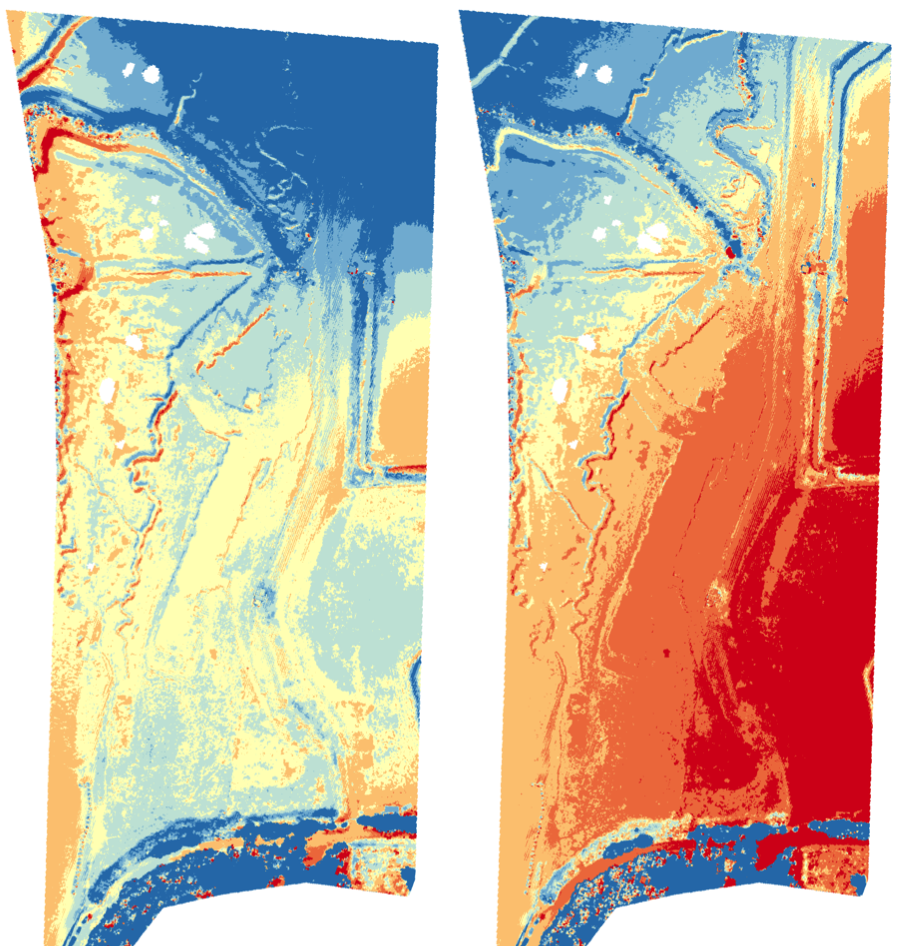

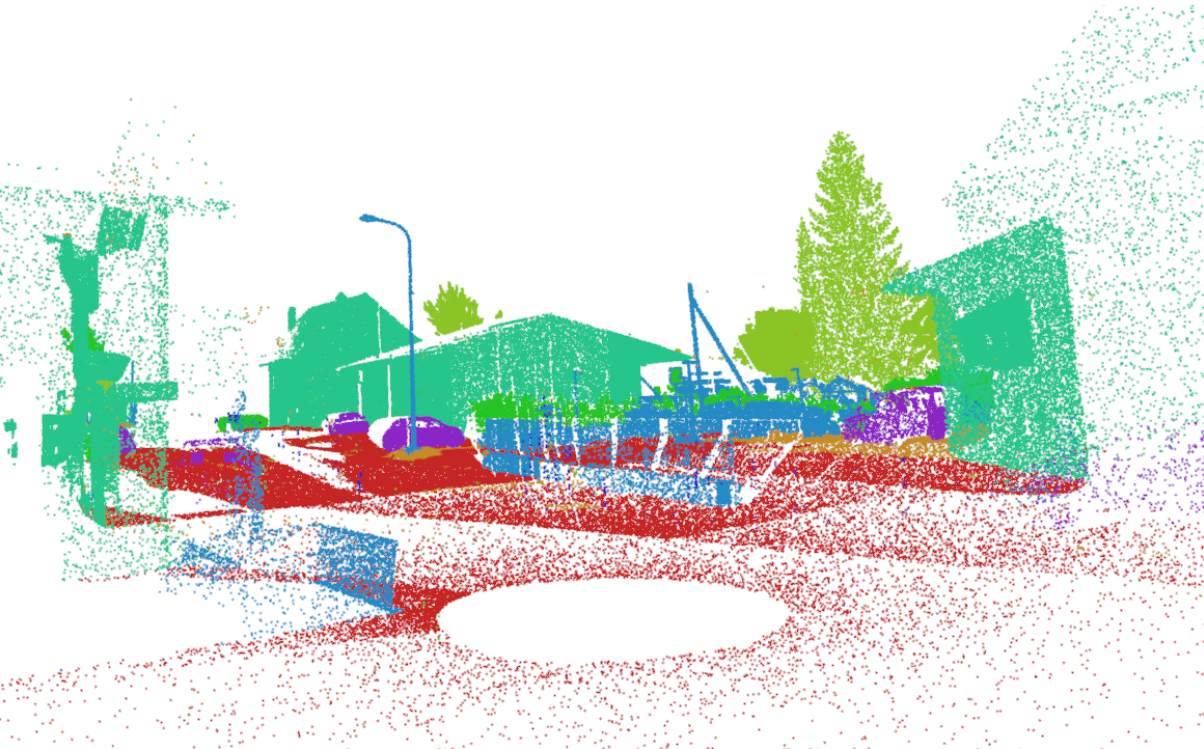

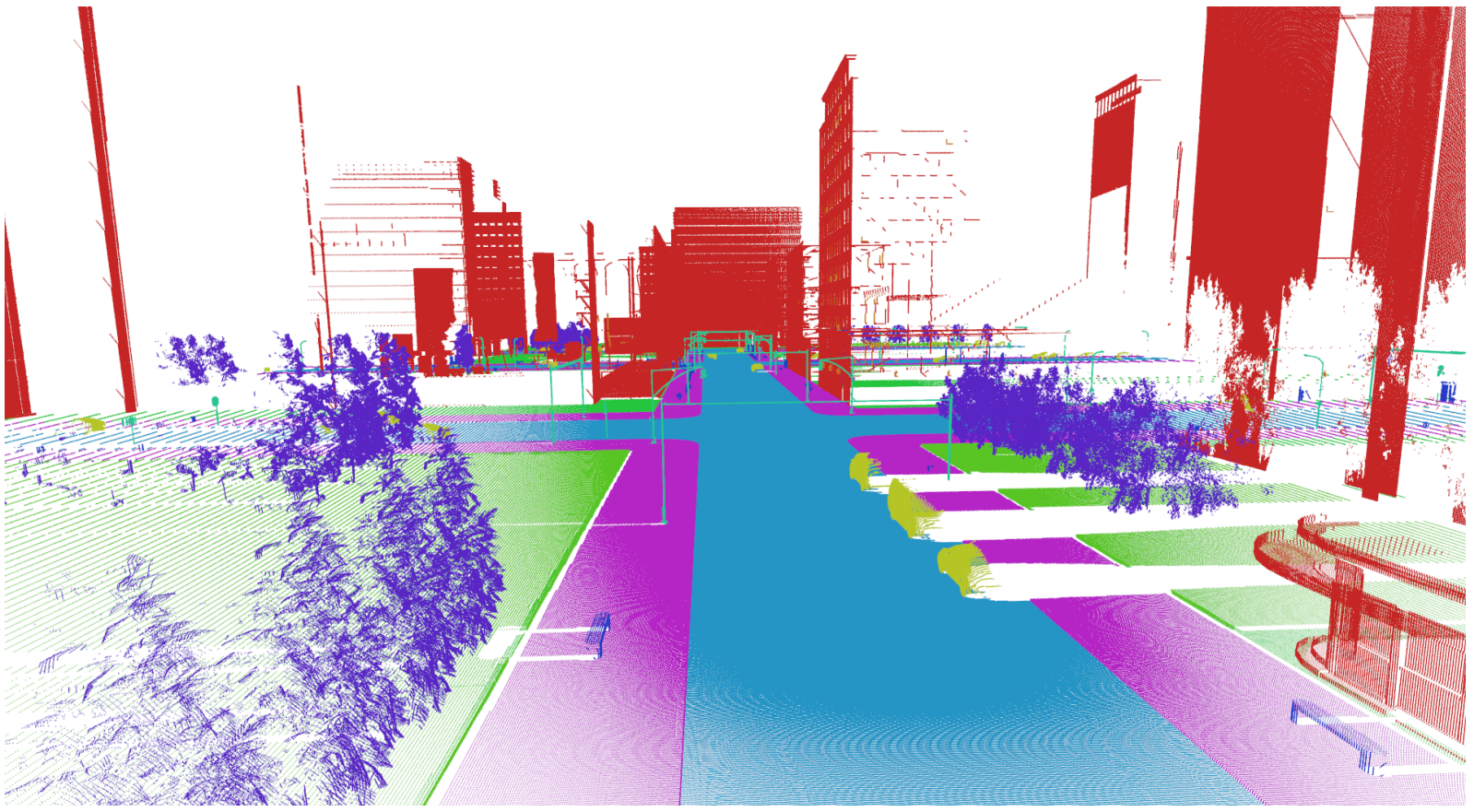

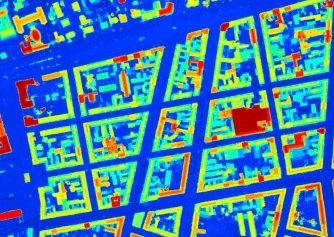

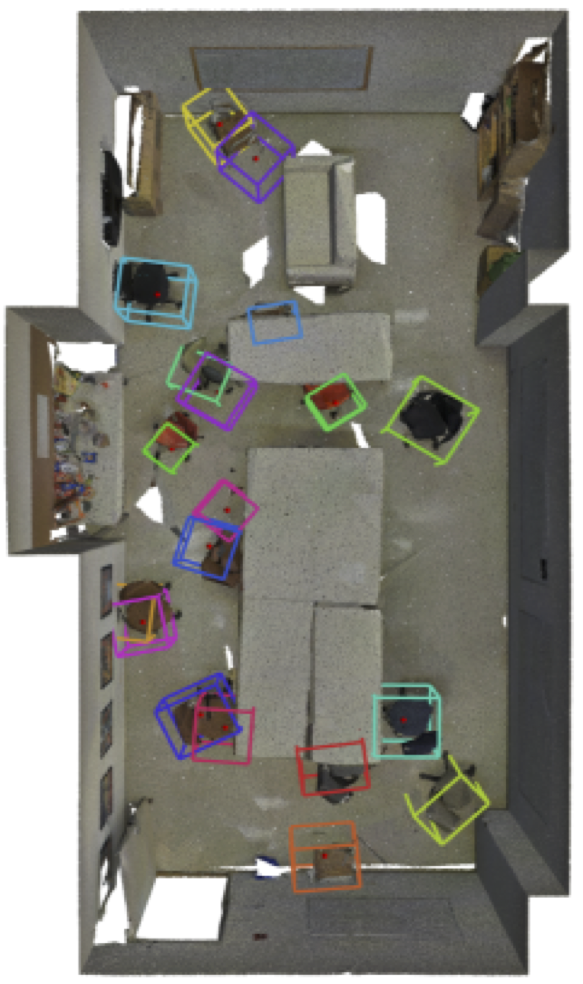

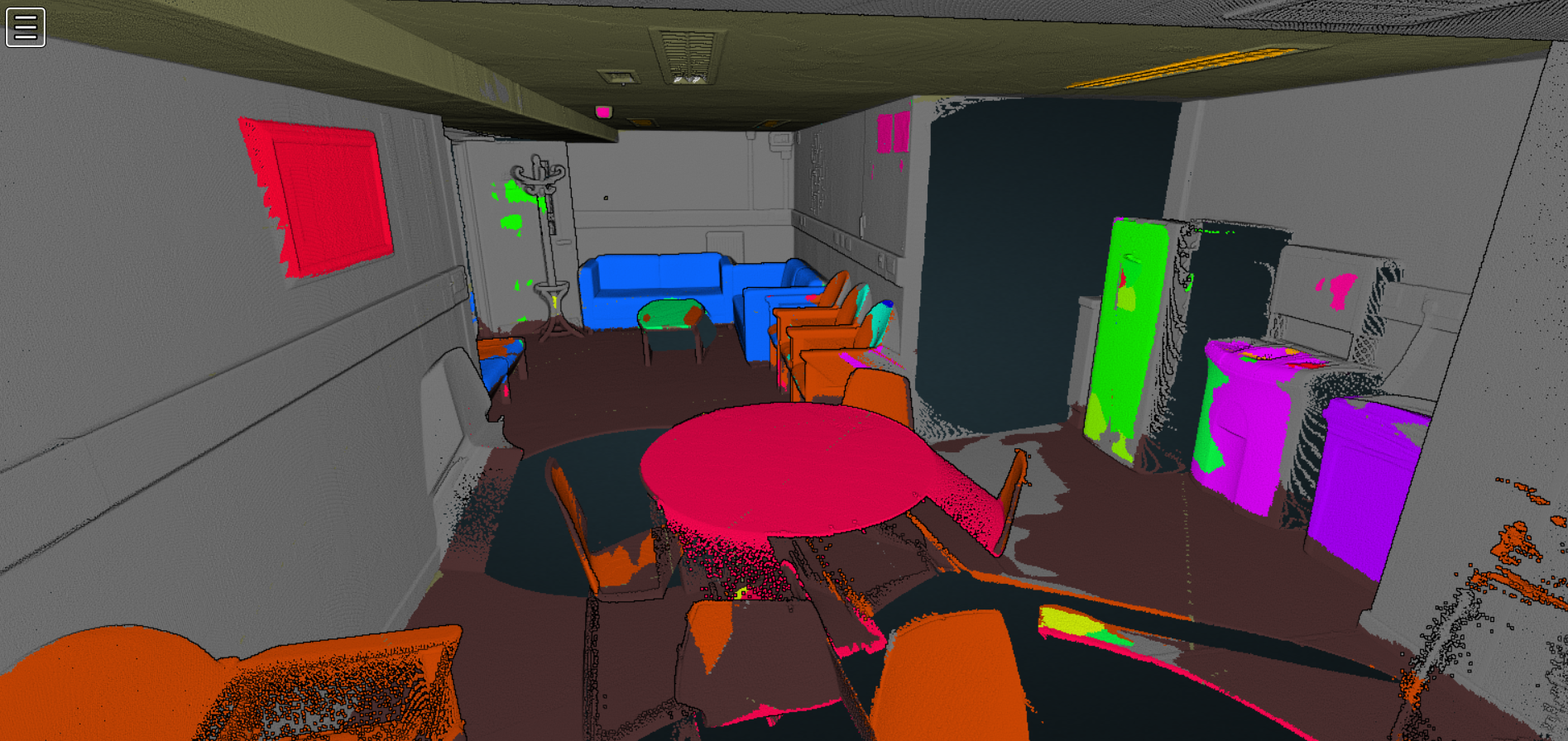

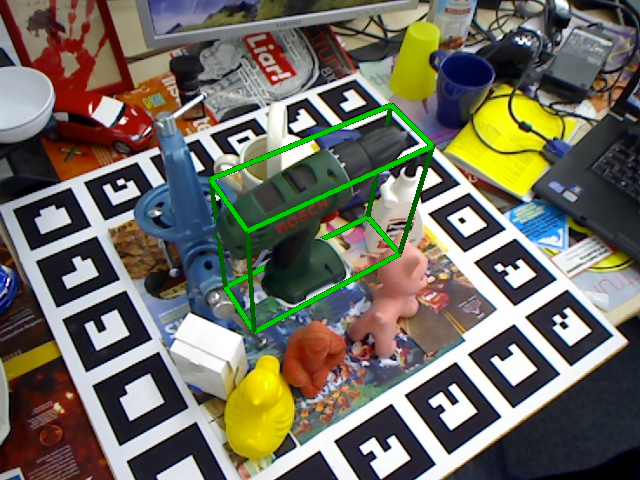

We address the problem of single-image relighting. Our work shows that monocular depth estimators can provide sufficient geometry when combined with our novel 3D shadow map prediction module.
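To give a feel for why an estimated depth map carries enough geometry to reason about cast shadows, here is a minimal sketch of a naive shadow test on a height field. It is not the learned 3D shadow map prediction module described above, only a hand-written baseline for illustration. The function name `naive_cast_shadows` and the parameters `height`, `light_dir`, `n_steps`, and `bias` are hypothetical. The sketch assumes the depth has already been converted to a per-pixel height (for example by negating it) and that the light direction is given in pixel steps plus a height gain per step.

```python
def naive_cast_shadows(height, light_dir, n_steps=64, bias=1e-3):
    """Return a 2D list of booleans, True where a pixel is in shadow.

    height    : 2D list of floats, per-pixel height (assumed derived from depth).
    light_dir : (dx, dy, dz), pixel offset and height gain per marching step
                when walking from a surface point toward the light.
    bias      : small tolerance to avoid self-shadowing on flat surfaces.
    """
    rows, cols = len(height), len(height[0])
    dx, dy, dz = light_dir
    shadow = [[False] * cols for _ in range(rows)]

    for y in range(rows):
        for x in range(cols):
            for step in range(1, n_steps + 1):
                # Position of the sample along the ray, snapped to a pixel.
                sx = round(x + step * dx)
                sy = round(y + step * dy)
                if not (0 <= sx < cols and 0 <= sy < rows):
                    break  # the ray left the image without being blocked
                # Height of the ray at this step, starting at the surface.
                ray_height = height[y][x] + step * dz
                if height[sy][sx] > ray_height + bias:
                    shadow[y][x] = True  # something taller blocks the light
                    break
    return shadow


# Usage: a flat ground plane with a wall two units tall at column 4,
# lit from the right at a shallow angle.
ground = [[0.0] * 8 for _ in range(8)]
for row in ground:
    row[4] = 2.0
mask = naive_cast_shadows(ground, (1.0, 0.0, 0.5), n_steps=8)
```

A hand-written test like this inherits every error in the estimated depth and knows nothing about geometry hidden from the camera, which is one plausible reason to pair the depth estimate with a learned shadow prediction module instead.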